Implementing a Real-time Data Streaming Solution with Apache Kafka & Spring Boot

Exploring and Implementing a Real-time Data Streaming Solution with Apache Kafka

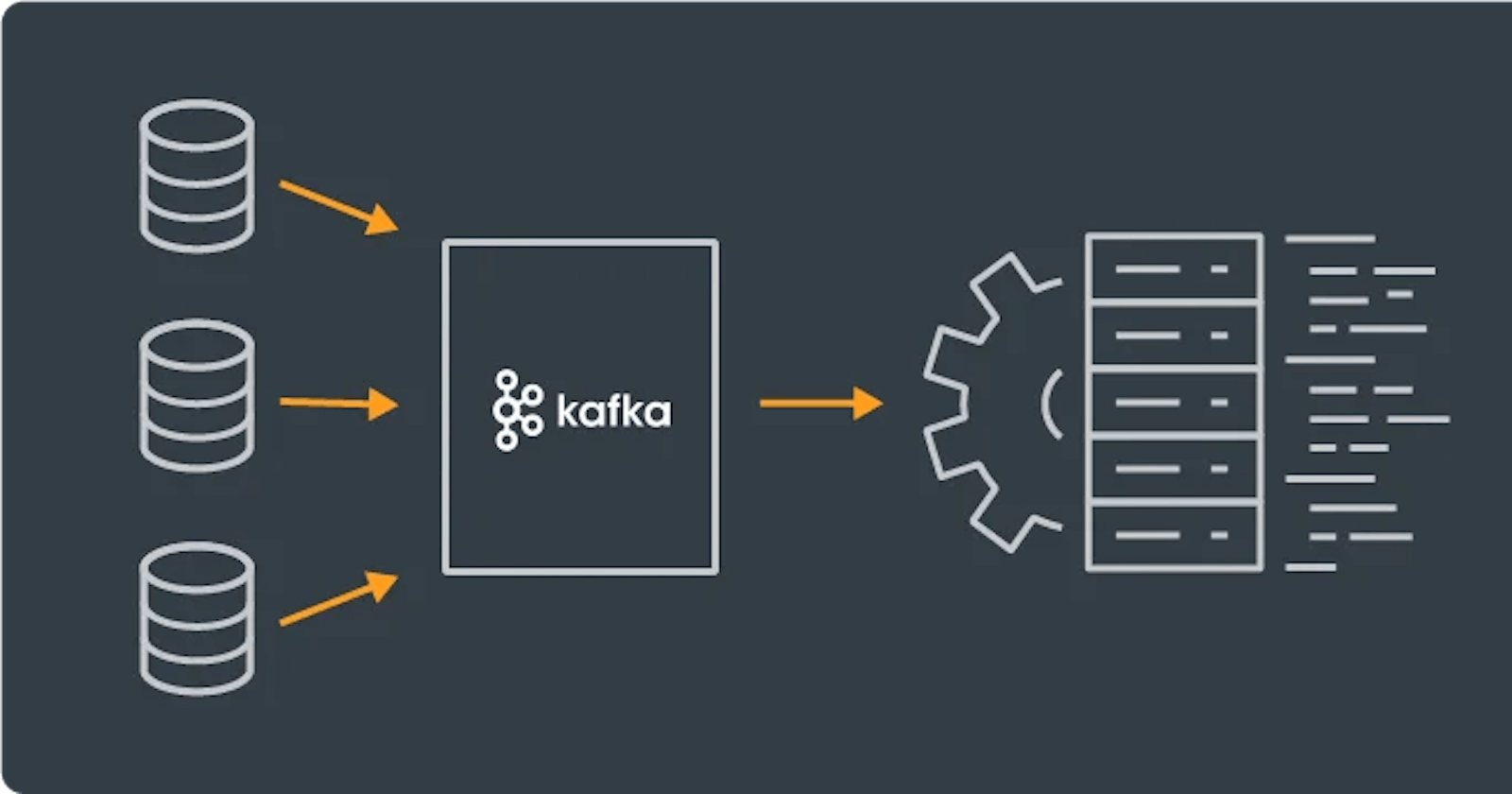

Real-time data streaming has become increasingly crucial in today's data-driven world, enabling businesses to react to events instantaneously, make timely decisions, and gain valuable insights from streaming data. Apache Kafka, with its distributed architecture and high-throughput capabilities, is a leading platform for building real-time data streaming solutions. In this article, we'll explore the concept of real-time data streaming and guide you through the implementation of a basic streaming solution using Apache Kafka.

Understanding Real-time Data Streaming

Real-time data streaming involves the continuous ingestion, processing, and analysis of data as it flows through a system. Unlike traditional batch processing, which operates on static datasets, real-time streaming processes data incrementally, enabling immediate actions and insights. Real-time streaming is essential in various use cases such as fraud detection, monitoring, recommendation systems, and IoT applications.
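To make the contrast with batch processing concrete, here is a minimal plain-Java sketch (no Kafka involved). The class and method names are hypothetical and chosen only for illustration: the batch method needs the complete dataset before it can answer, while the streaming variant folds in one event at a time and has a current result after each one.

```java
import java.util.List;

/** Contrasts a batch computation with an incremental (streaming) one. */
class RunningAverage {
    private long count = 0;
    private double mean = 0.0;

    /** Streaming style: fold one new value into the running mean. */
    void accept(double value) {
        count++;
        mean += (value - mean) / count;
    }

    double mean() {
        return mean;
    }

    /** Batch style: needs the complete dataset before it can answer. */
    static double batchMean(List<Double> values) {
        double sum = 0.0;
        for (double v : values) {
            sum += v;
        }
        return values.isEmpty() ? 0.0 : sum / values.size();
    }

    public static void main(String[] args) {
        RunningAverage avg = new RunningAverage();
        for (double reading : List.of(10.0, 20.0, 30.0)) {
            avg.accept(reading);
            // An up-to-date result is available after every single event.
            System.out.println("mean so far: " + avg.mean());
        }
        System.out.println("batch mean: " + batchMean(List.of(10.0, 20.0, 30.0)));
    }
}
```

Both approaches arrive at the same final value; the difference is that the streaming version can act on intermediate results while data is still arriving.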

Implementing a Real-time Data Streaming Solution with Kafka

Now, let's dive into implementing a basic real-time data streaming solution using Apache Kafka. We'll cover the following steps:

- Set Up Kafka Cluster: Install and configure Apache Kafka on your system.

- Create Kafka Producer: Develop a Kafka producer application to generate and publish streaming data.

- Define Kafka Topics: Define Kafka topics to organize and manage the streaming data.

- Implement Kafka Consumer: Develop a Kafka consumer application to consume and process the streaming data.

- Run the Streaming Solution: Start the Kafka producer and consumer applications to stream and process data in real time.

Step 1: Set Up Kafka Cluster

Download and install Apache Kafka on your system. Follow the official Kafka Quickstart Guide for detailed installation instructions or use our Step-by-Step guide.

Step 2: Create Kafka Producer

Develop a Kafka producer application in your preferred programming language (e.g., Java, Python) to generate and publish streaming data. Use Kafka producer libraries to interact with Kafka brokers and publish messages to Kafka topics.

Here's an example of a Kafka producer implemented in Java using Spring Boot:

    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.kafka.core.KafkaTemplate;
    import org.springframework.stereotype.Component;

    @Component
    public class KafkaProducer {

        private static final String TOPIC = "my-topic";

        @Autowired
        private KafkaTemplate<String, String> kafkaTemplate;

        public void sendMessage(String message) {
            System.out.println(String.format("Producing message: %s", message));
            // send() is asynchronous: it returns before the broker acknowledges the record.
            kafkaTemplate.send(TOPIC, message);
        }
    }

In this example, we create a Spring component called KafkaProducer responsible for sending messages to a Kafka topic named "my-topic". We autowire a KafkaTemplate provided by Spring Kafka, which simplifies the process of sending messages to Kafka.

To use this producer, you'll need to configure Spring Boot to connect to your Kafka broker(s) in the application.properties or application.yml file:

    spring.kafka.producer.bootstrap-servers=localhost:9092
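Since application.yml is mentioned as an alternative, the same producer setting might look like this in YAML. The two serializer entries are an addition for illustration only: they spell out the string serializers that match KafkaTemplate<String, String>. Spring Boot already uses these by default, so they can be left out.

```yaml
spring:
  kafka:
    producer:
      bootstrap-servers: localhost:9092
      # Optional: these are Spring Boot's defaults, shown here for clarity.
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
```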

Now, you can inject the KafkaProducer into your Spring Boot application components or controllers and use it to send messages to Kafka. For example:

    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.PostMapping;
    import org.springframework.web.bind.annotation.RequestBody;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class MessageController {

        @Autowired
        private KafkaProducer kafkaProducer;

        @PostMapping("/send-message")
        public void sendMessage(@RequestBody String message) {
            // The raw request body is forwarded to Kafka as-is (no JSON parsing).
            kafkaProducer.sendMessage(message);
        }
    }

This controller exposes an endpoint /send-message that accepts a POST request with a message payload. Upon receiving the request, it uses the KafkaProducer to send the message to the Kafka topic.

Remember to include the necessary dependencies in your pom.xml file if you're using Maven. The web starter is required for the REST controller shown above:

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>

    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
    </dependency>

With this setup, your Spring Boot application can act as a Kafka producer, sending messages to the specified Kafka topic.

Step 3: Define Kafka Topics

Define Kafka topics to organize and partition the streaming data. Topics act as logical channels where data is published by producers and consumed by consumers. Create topics using the Kafka command-line tool (kafka-topics.sh).

    bin/kafka-topics.sh --create --topic my-topic --bootstrap-server localhost:9092 --partitions 3 --replication-factor 1
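A topic is split into partitions (three in the command above). For records that carry a key, the producer derives the partition from a hash of that key, so records with the same key land in the same partition. The sketch below only illustrates that idea: PartitionSketch and partitionFor are hypothetical names, and String.hashCode() is a stand-in for the murmur2 hash that Kafka's default partitioner applies to the serialized key. The producer in Step 2 sends messages without a key, in which case Kafka picks the partitions itself.

```java
/** Simplified illustration of how a record key can be mapped to a partition. */
class PartitionSketch {

    /**
     * Maps a key to a partition in [0, numPartitions).
     * Assumption: String.hashCode() stands in for the hash Kafka's client really uses.
     */
    static int partitionFor(String key, int numPartitions) {
        // floorMod keeps the result non-negative even for negative hash codes.
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    public static void main(String[] args) {
        int partitions = 3; // same partition count as in the kafka-topics.sh command
        for (String key : new String[] {"sensor-1", "sensor-2", "sensor-1"}) {
            System.out.println(key + " -> partition " + partitionFor(key, partitions));
        }
    }
}
```

The point to take away is that the mapping is deterministic: both "sensor-1" records end up in the same partition, which is what allows Kafka to preserve their relative order.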

Step 4: Implement Kafka Consumer

Develop a Kafka consumer application to subscribe to Kafka topics, consume streaming data, and perform real-time processing or analysis. Use Kafka consumer libraries to fetch messages from Kafka topics and process them accordingly.

Here's an example of a Kafka consumer implemented in Java using Spring Boot:

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.springframework.kafka.annotation.KafkaListener;
    import org.springframework.stereotype.Component;

    @Component
    public class KafkaConsumer {

        private static final String TOPIC = "my-topic";

        @KafkaListener(topics = TOPIC, groupId = "my-group")
        public void consume(ConsumerRecord<String, String> record) {
            // key is null for records sent without a key, as the producer in Step 2 does
            System.out.println(String.format("Consumed message: key=%s, value=%s", record.key(), record.value()));
            // Add your custom processing logic here
        }
    }

In this example, we create a Spring component called KafkaConsumer annotated with @Component. We use the @KafkaListener annotation to specify the Kafka topic ("my-topic") and consumer group ("my-group") that this consumer will listen to. The consume method is invoked whenever a message is received from the specified topic.

To use this consumer, you'll need to configure Spring Boot to connect to your Kafka broker(s) in the application.properties or application.yml file:

    spring.kafka.consumer.bootstrap-servers=localhost:9092
    spring.kafka.consumer.group-id=my-group
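One more setting that often matters when trying this out is where a new consumer group starts reading. The fragment below is a suggestion rather than a requirement. Kafka's default for auto-offset-reset is latest, so a group without committed offsets only receives messages published after it has joined; earliest makes it start from the beginning of the topic instead. The deserializer entries simply spell out Spring Boot's defaults, which match ConsumerRecord<String, String>.

```properties
# Assumed addition: read from the start of the topic when the group has no committed offsets.
spring.kafka.consumer.auto-offset-reset=earliest
# Optional: these are Spring Boot's defaults, shown here for clarity.
spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer
spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer
```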

Now, you can start your Spring Boot application, and the KafkaConsumer will automatically listen for messages from the Kafka topic "my-topic". Whenever a message is published to the topic, the consume method will be invoked, and you can add your custom processing logic within this method.
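As an idea of what such custom processing logic could look like, here is a small plain-Java sketch that counts how often each message value has been seen. MessageCounter is a hypothetical helper, not part of Spring Kafka. It uses a ConcurrentHashMap on the assumption that the listener might be invoked from more than one thread.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical processing step: counts how often each message value has been seen. */
class MessageCounter {
    private final Map<String, Long> counts = new ConcurrentHashMap<>();

    /** Records one message and returns the updated count for its value. */
    long record(String value) {
        return counts.merge(value, 1L, Long::sum);
    }

    /** Returns how many times the value has been recorded so far (0 if never). */
    long countOf(String value) {
        return counts.getOrDefault(value, 0L);
    }

    public static void main(String[] args) {
        MessageCounter counter = new MessageCounter();
        // In the listener, this call would sit inside consume(...),
        // for example counter.record(record.value()).
        for (String value : new String[] {"login", "logout", "login"}) {
            System.out.println(value + " seen " + counter.record(value) + " time(s)");
        }
    }
}
```

Keeping the processing in a separate class like this also makes it testable without a running Kafka broker.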

Remember to include the necessary dependencies in your pom.xml file if you're using Maven:

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter</artifactId>
    </dependency>

    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
    </dependency>

With this setup, your Spring Boot application can act as a Kafka consumer, listening for messages from the specified Kafka topic.

Step 5: Run the Streaming Solution

Start the Kafka producer and consumer applications to stream and process data in real time. Monitor the Kafka cluster, producer, and consumer logs for any errors or issues.

Let's craft an example of a request payload for the Spring Boot application and demonstrate how to execute the payload using a REST client like cURL or Postman.

Example Request Payload

Suppose we want to send a JSON payload containing a message to be published to the Kafka topic. Here's an example of the request payload:

    {
      "message": "Hello Kafka!"
    }

Executing the Payload

You can use cURL or any REST client tool like Postman to execute the payload. Below are examples of how to execute the payload using cURL and Postman:

Using cURL

    curl -X POST \
      http://localhost:8080/send-message \
      -H 'Content-Type: application/json' \
      -d '{
        "message": "Hello Kafka!"
      }'

Replace http://localhost:8080/send-message with the appropriate endpoint of your Spring Boot application.
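If you would rather trigger the endpoint from Java, the same request can be built with the HTTP client that ships with the JDK (Java 11 or later). This is a sketch under that assumption: SendMessageRequest is a hypothetical helper name, and the base URL is the one from the cURL example.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/** Builds the same POST request as the cURL command, using the JDK's HTTP client API. */
class SendMessageRequest {

    static HttpRequest build(String baseUrl, String jsonBody) {
        return HttpRequest.newBuilder()
                .uri(URI.create(baseUrl + "/send-message"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = build("http://localhost:8080", "{\"message\": \"Hello Kafka!\"}");
        System.out.println(request.method() + " " + request.uri());
        // To actually send it (requires the Spring Boot application to be running):
        // java.net.http.HttpClient.newHttpClient()
        //         .send(request, java.net.http.HttpResponse.BodyHandlers.discarding());
    }
}
```

The send call is left commented out so the sketch runs without a server; uncomment it, and declare the checked exceptions it throws, once the application is up.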

Using Postman

- Open Postman and create a new request.

- Set the request type to POST.

- Enter the request URL (http://localhost:8080/send-message).

- Set the request body to raw and select JSON from the dropdown.

- Paste the JSON payload into the body section.

- Click on the Send button to execute the request.

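With this setup, when you execute the request using cURL or Postman, the Spring Boot application's controller receives the request body and passes it to the Kafka producer, which sends it to the Kafka topic. Because the controller declares its parameter as a plain String, the entire JSON document is published as the message value, not just the content of the "message" field.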

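Make sure your Spring Boot application is running and listening for requests on the specified endpoint (/send-message). You should see a "Producing message: ..." line in the application's console log when the producer hands the message to Kafka. If the consumer from Step 4 is running as well, a matching "Consumed message: ..." line confirms that the message actually passed through the topic.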

Conclusion

Apache Kafka provides a robust platform for building real-time data streaming solutions, enabling businesses to process, analyze, and react to streaming data in real time. By following the steps outlined in this article, you can implement a basic real-time data streaming solution using Apache Kafka and lay the foundation for more advanced streaming applications. Experiment with different use cases, configurations, and Kafka features to harness the full potential of real-time data streaming.