Utilizing AWS S3 Multipart Uploads: A Beginner's Guide

Amazon Simple Storage Service (S3) stores and retrieves data at scale. For large files, S3 multipart uploads split an object into parts that are uploaded independently, which makes uploads faster and easier to recover when one part fails. This guide walks through a multipart upload with the AWS CLI, from initiating the upload to completing it, and closes with best practices and security recommendations.

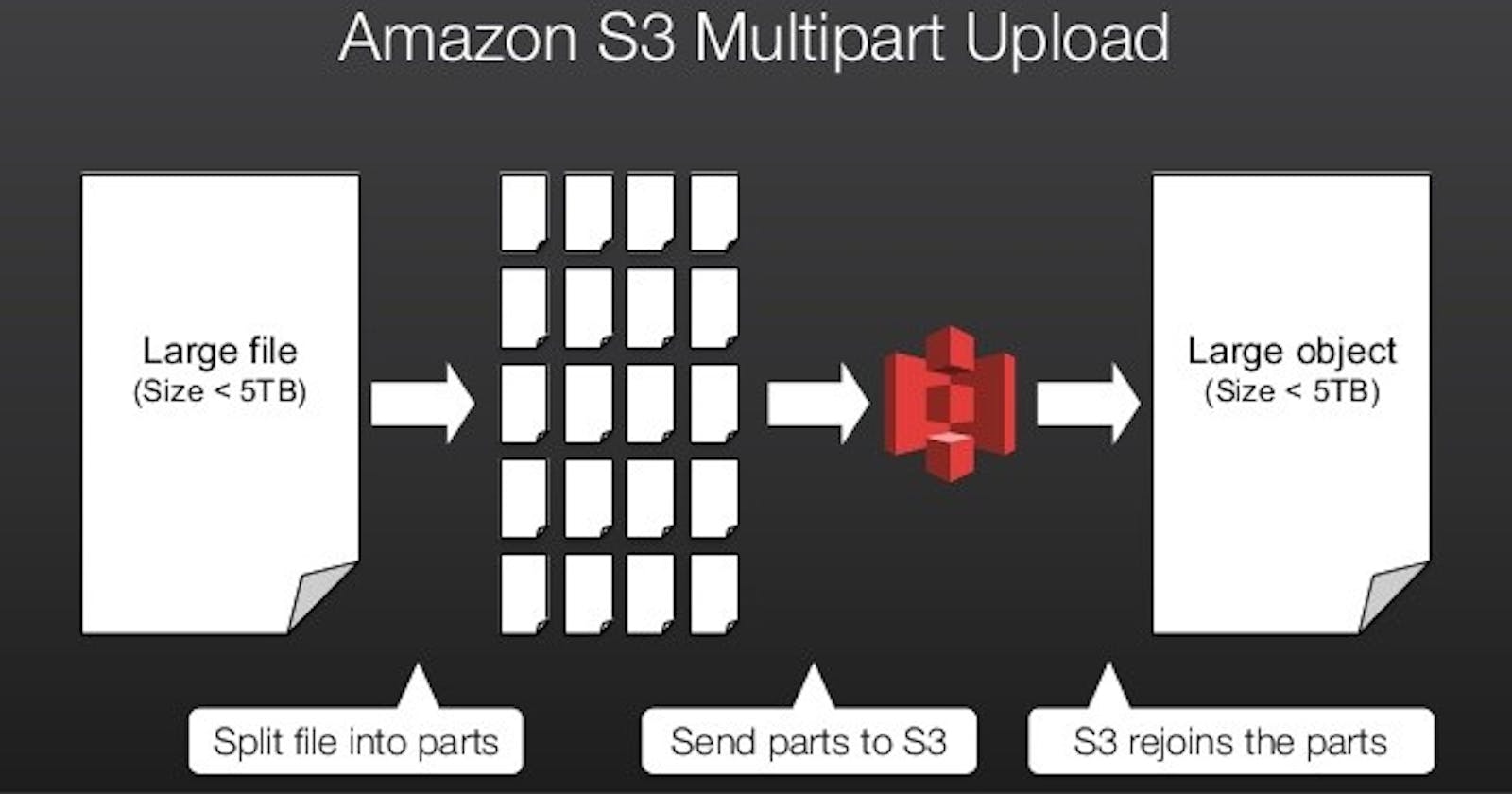

Understanding AWS S3 Multipart Uploads

What are Multipart Uploads?

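A multipart upload sends a large object to S3 as a set of smaller parts, which S3 assembles into a single object once the upload is completed. This brings several benefits: a failed part can be retried without restarting the whole upload, parts can be uploaded in parallel, and an upload can be paused and resumed. AWS suggests considering multipart upload once an object reaches about 100 MB, and it is required for objects larger than 5 GB, the limit for a single PUT request.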

Getting Started

Prerequisites

Before initiating a multipart upload, ensure you have the following in place:

- An AWS account with access to the S3 service.

- AWS Command Line Interface (CLI) installed and configured on your local machine.
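A quick check like the one below can confirm both prerequisites before you start. It is only a minimal sketch: `require_cmd` is a hypothetical helper name introduced here, not part of the AWS CLI.

```shell
# Hypothetical helper: succeed only if the named command is available.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    return 0
  fi
  echo "missing required command: $1" >&2
  return 1
}

# Usage (needs valid credentials and network access):
#   require_cmd aws && aws sts get-caller-identity
```

If the CLI is configured correctly, `aws sts get-caller-identity` prints the account and identity your credentials resolve to.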

Step-by-Step Guide

Step 1: Initiate Multipart Upload

Start by initiating a multipart upload using the AWS CLI. Replace YOUR_BUCKET_NAME and YOUR_OBJECT_KEY with your specific bucket and object key.

aws s3api create-multipart-upload --bucket YOUR_BUCKET_NAME --key YOUR_OBJECT_KEY

Save the returned UploadId as it will be required for subsequent steps.
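Rather than copying the UploadId by hand, you could capture it in a shell variable. The sketch below assumes a hypothetical helper named `start_upload`; it relies on the CLI's global `--query` and `--output text` options to print only the UploadId.

```shell
# Hypothetical helper: start a multipart upload and print only the UploadId.
# --query selects one field of the response; --output text prints it bare.
start_upload() {
  bucket=$1
  key=$2
  aws s3api create-multipart-upload \
    --bucket "$bucket" --key "$key" \
    --query UploadId --output text
}

# Usage:
#   UPLOAD_ID=$(start_upload YOUR_BUCKET_NAME YOUR_OBJECT_KEY)
```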

Step 2: Upload Parts

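Upload each part of the object independently. The s3api commands do not split the file for you, so first divide it into part files (for example, with the split utility). Use the UploadId obtained in the previous step and assign each part a unique PartNumber from 1 to 10,000; S3 assembles the final object in ascending part-number order. Replace PART_NUMBER and FILE_TO_UPLOAD accordingly.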

aws s3api upload-part --bucket YOUR_BUCKET_NAME --key YOUR_OBJECT_KEY --part-number PART_NUMBER --upload-id YOUR_UPLOAD_ID --body FILE_TO_UPLOAD

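Repeat this step for each part of your object. Each call returns an ETag for the uploaded part. Record it together with the part number, because both are needed to complete the upload.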
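Doing this by hand gets tedious beyond a few parts, so here is one way the split-and-upload loop might be scripted. Treat it as a sketch under assumptions: `upload_parts` and the manifest file of `PART_NUMBER ETAG` lines are hypothetical conventions introduced for illustration, and the parts are uploaded one after another rather than in parallel.

```shell
# Hypothetical helper: split FILE into PART_BYTES-sized pieces, upload each
# piece, and write "PART_NUMBER ETAG" lines to MANIFEST.
upload_parts() {
  bucket=$1; key=$2; upload_id=$3; file=$4; part_bytes=$5; manifest=$6

  workdir=$(mktemp -d) || return 1
  # -a 4 gives four-letter suffixes (aaaa, aaab, ...), enough for 10,000
  # parts; the glob below returns them in sorted order.
  split -b "$part_bytes" -a 4 "$file" "$workdir/part-" || return 1

  : > "$manifest"
  n=1
  for part in "$workdir"/part-*; do
    # --query ETag --output text prints just the ETag of the uploaded part.
    etag=$(aws s3api upload-part \
      --bucket "$bucket" --key "$key" \
      --part-number "$n" --upload-id "$upload_id" \
      --body "$part" --query ETag --output text) || return 1
    printf '%s %s\n' "$n" "$etag" >> "$manifest"
    n=$((n + 1))
  done
  rm -rf "$workdir"
}

# Usage (8 MiB parts):
#   upload_parts YOUR_BUCKET_NAME YOUR_OBJECT_KEY "$UPLOAD_ID" big.bin 8388608 manifest.txt
```

If any part fails, the function stops and leaves the temporary part files in place, so the failed part can be inspected or sent again.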

Step 3: Complete Multipart Upload

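After uploading all parts, complete the multipart upload so that S3 assembles them into the final object. List every part with its PartNumber and ETag, in ascending PartNumber order. Replace YOUR_UPLOAD_ID, PART_ETAG, and PART_NUMBER with the appropriate values from the previous steps.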

aws s3api complete-multipart-upload --bucket YOUR_BUCKET_NAME --key YOUR_OBJECT_KEY --upload-id YOUR_UPLOAD_ID --multipart-upload 'Parts=[{ETag="PART_ETAG",PartNumber=PART_NUMBER}, ...]'
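With more than a handful of parts, typing the Parts list inline becomes error-prone. The CLI also accepts the structure as a JSON file through `file://`. The sketch below assumes a hypothetical manifest file with one `PART_NUMBER ETAG` pair per line, already sorted by part number; `build_parts_json` is an illustrative helper name.

```shell
# Hypothetical helper: turn a manifest of "PART_NUMBER ETAG" lines into the
# JSON document that --multipart-upload expects.
build_parts_json() {
  awk '
    BEGIN { printf "{\"Parts\":[" }
    {
      etag = $2
      gsub(/"/, "", etag)   # drop the quotes S3 returns; re-added, escaped, below
      printf "%s{\"ETag\":\"\\\"%s\\\"\",\"PartNumber\":%d}", (NR > 1 ? "," : ""), etag, $1
    }
    END { print "]}" }
  ' "$1"
}

# Usage:
#   build_parts_json manifest.txt > parts.json
#   aws s3api complete-multipart-upload --bucket YOUR_BUCKET_NAME \
#     --key YOUR_OBJECT_KEY --upload-id YOUR_UPLOAD_ID \
#     --multipart-upload file://parts.json
```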

Best Practices and Tips

Part Size: Choose a part size based on your object's size and network conditions. S3 requires each part to be between 5 MiB and 5 GiB, except the last part, which may be smaller, and allows at most 10,000 parts per upload.
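Because S3 caps an upload at 10,000 parts and requires at least 5 MiB for every part but the last, the part size and the part count constrain each other. The arithmetic can be sketched as follows; `part_count` and `choose_part_size` are hypothetical helper names, and sizes are in bytes.

```shell
MIN_PART_BYTES=$((5 * 1024 * 1024))   # 5 MiB lower bound for all but the last part
MAX_PARTS=10000                       # upper bound on parts per upload

# Number of parts needed for SIZE bytes at PART_BYTES per part
# (integer ceiling division).
part_count() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

# Smallest part size, in bytes, that respects both limits above.
choose_part_size() {
  needed=$(( ($1 + MAX_PARTS - 1) / MAX_PARTS ))
  if [ "$needed" -lt "$MIN_PART_BYTES" ]; then
    echo "$MIN_PART_BYTES"
  else
    echo "$needed"
  fi
}
```

For example, `part_count 104857600 8388608` prints 13: a 100 MiB object in 8 MiB parts needs twelve full parts plus a smaller final one. In practice you would usually pick a larger, rounder part size than the minimum this returns.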

Error Handling: Check the result of every upload-part call and retry the parts that fail. Because parts are independent, only the failed part needs to be sent again.
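A small retry wrapper is one way to apply this in a script. The version below is a sketch, not a recommendation of specific values: `retry` and the `RETRY_DELAY` variable are hypothetical names, and the wait simply doubles after each failed attempt. The AWS CLI also retries some failures on its own, which you can tune through the `AWS_MAX_ATTEMPTS` and `AWS_RETRY_MODE` environment variables.

```shell
# Hypothetical helper: run a command up to MAX_ATTEMPTS times, doubling the
# wait between attempts. RETRY_DELAY (seconds, default 1) sets the first wait.
retry() {
  max_attempts=$1
  shift
  attempt=1
  delay=${RETRY_DELAY:-1}
  until "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    sleep "$delay"
    delay=$((delay * 2))
    attempt=$((attempt + 1))
  done
}

# Usage:
#   retry 5 aws s3api upload-part --bucket YOUR_BUCKET_NAME --key YOUR_OBJECT_KEY \
#     --part-number PART_NUMBER --upload-id YOUR_UPLOAD_ID --body FILE_TO_UPLOAD
```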

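Monitoring: Track in-progress uploads with aws s3api list-multipart-uploads and aws s3api list-parts. Amazon CloudWatch request metrics for S3, if enabled on the bucket, show request counts and error rates, though not the progress of an individual upload.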
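From the command line, progress can be checked by asking S3 which uploads are still open and which parts it already holds. The two wrappers below are a sketch; `list_open_uploads` and `list_uploaded_parts` are hypothetical names around the real `list-multipart-uploads` and `list-parts` commands.

```shell
# Hypothetical helper: print "INITIATED KEY UPLOAD_ID" for every multipart
# upload in the bucket that has been started but not completed or aborted.
list_open_uploads() {
  aws s3api list-multipart-uploads --bucket "$1" \
    --query 'Uploads[].[Initiated, Key, UploadId]' --output text
}

# Hypothetical helper: print "PART_NUMBER SIZE_IN_BYTES" for each part
# already stored for one upload.
list_uploaded_parts() {
  aws s3api list-parts --bucket "$1" --key "$2" --upload-id "$3" \
    --query 'Parts[].[PartNumber, Size]' --output text
}

# Usage:
#   list_open_uploads YOUR_BUCKET_NAME
#   list_uploaded_parts YOUR_BUCKET_NAME YOUR_OBJECT_KEY YOUR_UPLOAD_ID
```

When a bucket has no open uploads, the first command prints `None`, because the response contains no Uploads list to query.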

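Abort Multipart Uploads: Parts that have already been uploaded are stored, and billed, until the upload is completed or aborted. To abandon an upload and free that storage, run:

aws s3api abort-multipart-upload --bucket YOUR_BUCKET_NAME --key YOUR_OBJECT_KEY --upload-id YOUR_UPLOAD_ID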
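Abandoned uploads can also be cleaned up automatically with a bucket lifecycle rule. The sketch below writes such a rule to a file; `write_abort_rule`, the rule ID, and the seven-day window in the usage lines are illustrative assumptions. Note that `put-bucket-lifecycle-configuration` replaces the bucket's entire existing lifecycle configuration, so merge this rule into any rules you already have.

```shell
# Hypothetical helper: write a lifecycle configuration that aborts multipart
# uploads still incomplete DAYS days after they were initiated.
write_abort_rule() {
  days=$1
  out=$2
  cat > "$out" <<EOF
{
  "Rules": [
    {
      "ID": "abort-incomplete-multipart-uploads",
      "Status": "Enabled",
      "Filter": { "Prefix": "" },
      "AbortIncompleteMultipartUpload": { "DaysAfterInitiation": $days }
    }
  ]
}
EOF
}

# Usage:
#   write_abort_rule 7 lifecycle.json
#   aws s3api put-bucket-lifecycle-configuration --bucket YOUR_BUCKET_NAME \
#     --lifecycle-configuration file://lifecycle.json
```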

Security Best Practices

When working with AWS S3, it's crucial to implement robust security practices to safeguard your data. Consider the following best practices:

1. Bucket Policies and Access Control:

- Utilize AWS Identity and Access Management (IAM) to manage access to your S3 buckets.

- Define granular bucket policies to control permissions for different users and roles.

2. Encryption:

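- Enable server-side encryption for data at rest using AWS Key Management Service keys (SSE-KMS) or Amazon S3-managed keys (SSE-S3). For a multipart upload, the encryption settings are specified when the upload is initiated.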

- Implement client-side encryption for an additional layer of security.

3. Access Logging:

- Enable access logging to track requests made to your S3 buckets. This provides visibility into who is accessing your data and when.

4. Versioning:

- Enable versioning for your S3 buckets to maintain a history of object modifications. This can be invaluable for data recovery and compliance.

5. Secure Data Transfers:

- Use HTTPS to encrypt data in transit when interacting with S3. Avoid using unencrypted HTTP.

6. Bucket Naming and DNS Considerations:

- Follow best practices for naming your S3 buckets. Avoid using sensitive information and consider DNS compatibility.

7. IAM Roles and Policies:

- Assign IAM roles with the principle of least privilege. Only grant the permissions necessary for specific tasks.

8. Cross-Origin Resource Sharing (CORS):

- Configure CORS settings to control which web origins may make cross-origin requests to your S3 buckets from a browser. CORS is enforced by browsers and complements, rather than replaces, IAM and bucket policies.

9. Regular Security Audits:

- Conduct regular security audits to review and update your S3 security settings. Stay informed about changes in AWS security features.
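To make two of these practices concrete (HTTPS-only access and least privilege), the sketch below generates a bucket policy that denies requests not sent over TLS and an identity policy limited to the actions a multipart uploader typically needs. The helper names and file names are hypothetical, and the policies are starting points to adapt, not complete security configurations. Keep in mind that `put-bucket-policy` replaces any existing bucket policy.

```shell
# Hypothetical helper: bucket policy that denies any request not sent over TLS.
write_tls_only_policy() {
  bucket=$1
  out=$2
  cat > "$out" <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DenyInsecureTransport",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:*",
      "Resource": ["arn:aws:s3:::$bucket", "arn:aws:s3:::$bucket/*"],
      "Condition": { "Bool": { "aws:SecureTransport": "false" } }
    }
  ]
}
EOF
}

# Hypothetical helper: identity policy limited to multipart-upload actions
# on a single bucket.
write_uploader_policy() {
  bucket=$1
  out=$2
  cat > "$out" <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:PutObject", "s3:AbortMultipartUpload", "s3:ListMultipartUploadParts"],
      "Resource": "arn:aws:s3:::$bucket/*"
    },
    {
      "Effect": "Allow",
      "Action": "s3:ListBucketMultipartUploads",
      "Resource": "arn:aws:s3:::$bucket"
    }
  ]
}
EOF
}

# Usage:
#   write_tls_only_policy YOUR_BUCKET_NAME tls-policy.json
#   aws s3api put-bucket-policy --bucket YOUR_BUCKET_NAME --policy file://tls-policy.json
#   write_uploader_policy YOUR_BUCKET_NAME uploader-policy.json
```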

Note: Adjust the values in the commands and configurations according to your specific use case and AWS environment.

Conclusion

AWS S3 multipart uploads handle large objects by breaking an upload into smaller parts, which improves reliability and lets parts be sent in parallel. Follow the steps in this guide to initiate the upload, send the parts, and complete it, and abort any upload you no longer need so its parts do not linger in your bucket.